Let me show you how you can combine PostgreSQL, Docker and DbUp to create pleasant and quick database development environment without installing anything (besides docker of course).

I won’t introduce you to docker or Postgres - you should have some basic knowledge in this area although I will follow you with every step. When it comes to DbUp it is a .NET Library that helps you deploy changes to your SQL database. It supports:

- SqlServer

- MySql

- SQLite

- PostgreSQL

- Oracle

- Firebird

The assumption around this library is straightforward. It creates a version table to keep track which scripts were executed and applies new ones incrementally. It embraces transitions instead of “state”. Thanks to this approach you can upgrade your databases without db downtime. Check the docs to find out more.

Ready? So let’s Go!

Step 1 - Run PostgresSQL in docker container

Let’s start with simple docker-compose.yml:

1version: '3.7'

2services:

3 db:

4 image: postgres

5 restart: always

6 environment:

7 POSTGRES_PASSWORD: Secret!Passw0rd

8 POSTGRES_USER: postgres

9 ports:

10 - 5432:5432

Having that file let’s just run command docker-compose up -d. Now we can verify if docker container with postgres is running on the default port with docker ps. You should see this:

1λ docker ps

2CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3a7446521a3dg postgres "docker-entrypoint.s…" 6 seconds ago Up 5 seconds 0.0.0.0:5432->5432/tcp postgres_db_1

So once we have postgres up and running we can play a little bit with it by connecting to the container using docker exec -it a74 bash (make sure to enter yours container id). After we enter interactie mode let’s run psql -U postgres. We can list databases using \l command or use sql to do whatever we wnat. As an example I will create random database.

1postgres=# CREATE DATABASE mytestdb;

2CREATE DATABASE

3postgres=# \l

4 List of databases

5 Name | Owner | Encoding | Collate | Ctype | Access privileges

6-----------+----------+----------+------------+------------+-----------------------

7 mytestdb | postgres | UTF8 | en_US.utf8 | en_US.utf8 |

8 postgres | postgres | UTF8 | en_US.utf8 | en_US.utf8 |

9 template0 | postgres | UTF8 | en_US.utf8 | en_US.utf8 | =c/postgres +

10 | | | | | postgres=CTc/postgres

11 template1 | postgres | UTF8 | en_US.utf8 | en_US.utf8 | =c/postgres +

12 | | | | | postgres=CTc/postgres

13(4 rows)

14

15postgres=# \q

16root@57368050ac99:/# exit

Use \q command to exit psql and of course ctr+d combination to leave container in detached mode.

Step 2 - Create DbUp Console application

Let’s create new netcore console app. You can use your IDE but I will stick with CLI for now. First navigate to the directory with your docker-compose.yml and run dotnet new console -o DbMigrator or any other name. Navigate to new project fodler and add two packages

dotnet add package dbup-coredotnet add package dbup-postgresql

then modify Program.cs:

1using System;

2using System.Linq;

3using System.Reflection;

4using DbUp;

5

6namespace DbMigrator

7{

8 class Program

9 {

10 static int Main(string[] args)

11 {

12 var connectionString =

13 args.FirstOrDefault()

14 ?? "Host=localhost;User Id=postgres;Password=Secret!Passw0rd;Database=crazy_database;Port=5432";

15 EnsureDatabase.For.PostgresqlDatabase(connectionString);

16 var upgrader = DeployChanges.To

17 .PostgresqlDatabase(connectionString)

18 .WithScriptsEmbeddedInAssembly(Assembly.GetExecutingAssembly())

19 .LogToConsole()

20 .Build();

21 var result = upgrader.PerformUpgrade();

22 if (!result.Successful)

23 {

24 Console.ForegroundColor = ConsoleColor.Red;

25 Console.WriteLine(result.Error);

26 Console.ResetColor();

27 return -1;

28 }

29 Console.ForegroundColor = ConsoleColor.Green;

30 Console.WriteLine(value: "Success!");

31 Console.ResetColor();

32 return 0;

33 }

34 }

35}

or Program.fs if you prefer F#, I do :)

1open System

2open System.Reflection

3open DbUp

4

5let logAndParseEngineResult (result: Engine.DatabaseUpgradeResult) =

6 match result.Successful with

7 | true ->

8 Console.ForegroundColor <- ConsoleColor.Green

9 Console.WriteLine("Success")

10 Console.ResetColor()

11 0

12 | false ->

13 Console.ForegroundColor <- ConsoleColor.Red

14 Console.WriteLine result.Error

15 Console.ResetColor()

16 -1

17

18[<EntryPoint>]

19let main argv =

20 let connectionString =

21 match argv |> Array.tryHead with

22 | Some connectionString -> connectionString

23 | None ->

24 "Server=localhost,1433;Initial Catalog=TravelServicesConsumer;User ID=sa;Password=Strong!Passw0rd;MultipleActiveResultSets=True;Connection Timeout=30;"

25 EnsureDatabase.For.SqlDatabase(connectionString)

26 DeployChanges.To.SqlDatabase(connectionString)

27 .WithScriptsEmbeddedInAssembly(Assembly.GetExecutingAssembly())

28 .LogToConsole().Build().PerformUpgrade()

29 |> logAndParseEngineResult

No magic here. Everything is according to dbup documentation. I made some minor changes as the main example on the docs is for sqlserver. I have also used EnsureDatabase... what is fine for local development but remember that for other environments you should create the database before with all necessary settings (collation, security, connlimit etc…).

Time to run the app! Let dbup create the crazy_database for us. Run dotnet run we should see this:

1λ dotnet run

2Master ConnectionString => Host=localhost;Username=postgres;Password=***************;Database=postgres;Port=5432

3Beginning database upgrade

4Checking whether journal table exists..

5Journal table does not exist

6No new scripts need to be executed - completing.

7Success!

We can connect to docker container again and list databases as described in previous point. Use \c dbname to connect to specific database. Works for me:

1postgres=# \c crazy_database

2You are now connected to database "crazy_database" as user "postgres".

Let’s add 2 basic sql scripts to create simple tables:

09032020_AddTable_Customer.sql

1CREATE TABLE Customers (

2 Id int,

3 LastName varchar(255),

4 FirstName varchar(255),

5 Address varchar(255)

6);

002_FillSampleData.sql

1INSERT INTO Customers VALUES

2(

3 1,

4 'Gerard',

5 'Thomas',

6 'Nowhere 22/11'

7),

8(

9 2,

10 'Newman',

11 'Oldman',

12 'Somwhere 2/12'

13)

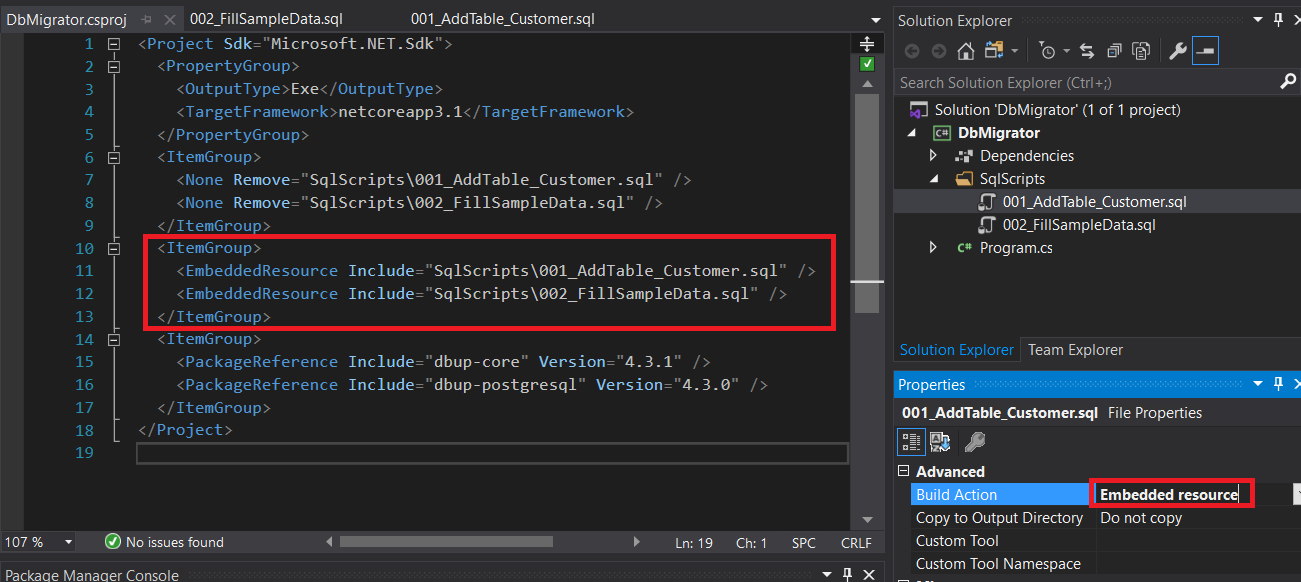

Set the script files as embedded ressources. You can do it in your IDE or in csproj. Example scripts looks like this:

Having those things let’s run the migrator again.

1λ dotnet run

2Master ConnectionString => Host=localhost;Username=postgres;Password=***************;Database=postgres;Port=5432

3Beginning database upgrade

4Checking whether journal table exists..

5Journal table does not exist

6Executing Database Server script 'DbMigrator.SqlScripts.001_AddTable_Customer.sql'

7Checking whether journal table exists..

8Creating the "schemaversions" table

9The "schemaversions" table has been created

10Executing Database Server script 'DbMigrator.SqlScripts.002_FillSampleData.sql'

11Upgrade successful

12Success!

Perfect! So we already have a postgres instance running in docker container and we are able to incrementally apply migrations using DbUp. Let’s see what is in the database;

1λ docker exec -it 43c bash

2root@43c7615a4146:/# psql -U postgres

3psql (12.2 (Debian 12.2-2.pgdg100+1))

4Type "help" for help.

5

6postgres=# \c crazy_database

7You are now connected to database "crazy_database" as user "postgres".

8crazy_database=# \dt

9 List of relations

10 Schema | Name | Type | Owner

11--------+----------------+-------+----------

12 public | customers | table | postgres

13 public | schemaversions | table | postgres

14(2 rows)

15

16crazy_database=# SELECT * FROM schemaversions;

17 schemaversionsid | scriptname | applied

18------------------+-------------------------------------------------+----------------------------

19 1 | DbMigrator.SqlScripts.001_AddTable_Customer.sql | 2020-03-03 22:39:44.720556

20 2 | DbMigrator.SqlScripts.002_FillSampleData.sql | 2020-03-03 22:39:44.760178

21(2 rows)

22

23crazy_database=#

Step 3 - Run migrations in docker

One may ask why? We already can run DbUp using dotnet CLI against dockerized postgres. This is a good start but when your app is growing and contains different services with different databases this really simplifies your life. Everything you have to do is docker-compose up and you are ready to go with all your databases + schema up to date! This is a game changer when you want to run integration-tests. It is still possible without DbUp being dockerized but the CI scripts are growing with more and more commands. With good docker-compose you simply don’t have to worry about that. Also when I write integration tests I tend to play with database causing some rubbish data. It is really easy to docker-compose down and docker-compose up and have everyting fresh. Multiply this act by few times per day and you can save some time for coffee!

We have two options here:

- Add more layers on top of postgres image. The layers will contain netcore and DbMibrator.

- Create other docker-image with netcore and DbMigrator. The container with DbMigrator will reach postgres apply migration and exit automatically.

I tend to use the second approach. Docker society (and the docker team) advises to not create monolithic Dockerfiles (so containing multiple tech things). Think for a while… this should make sense! You should be able to use postgres in your docker-compose by other services without waiting to some migrations to apply (for example to run migrations for other database. Or to spin up some 3rd party service which don’t need your migrations but needs postgres). Let’s get our hands dirty again.

Create Dockerfile in the DbMigrator project like the following:

1FROM mcr.microsoft.com/dotnet/core/sdk:3.1

2WORKDIR /build

3COPY DbMigrator.csproj ./

4RUN dotnet restore

5COPY . .

6RUN dotnet publish -o /publish

7WORKDIR /publish

8CMD ["sh", "-c", "dotnet DbMigrator.dll \"${DB_CONNECTION}\""]

It’s easy one. If your are not sure what is going on you should definetely check the docker docs. In short (number contains script line numbers):

- Use dotnet core sdk base image

- Switch to build directory

- Copy the csproj first

- Restore the packages

- Copy rest of the files into the container

- Obvious…

- Change the working directory to publish

- Set the default parameters which will be passed to running container and run command with shell (by involving shell we can make use of env variables).

Line 3-4 embrace docker layers caching so we don’t need to restore the packages each time we edit a DbMigrator source file.

Now it is time to update our compose-file.

1version: '3.6'

2services:

3 db:

4 image: postgres

5 restart: always

6 environment:

7 POSTGRES_PASSWORD: Secret!Passw0rd

8 POSTGRES_USER: postgres

9 POSTGRES_DB: crazy_database

10 ports:

11 - 5432:5432

12 db-migrations:

13 build:

14 context: DbMigrator/

15 dockerfile: ./Dockerfile

16 depends_on:

17 - db

18 environment:

19 DB_CONNECTION: "Host=db;User Id=postgres;Password=Secret!Passw0rd;Database=crazy_database;Port=5432"

The depends_on tells docker that even if we decide to run this command:

docker-compose up -d db-migrations

then it should run db container first as this is its upstream dependency.

DONE!

Let’s check if this works. Just run docker-compose up -d. I have this output:

1λ docker ps

2CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3d4546121a9ec postgres "docker-entrypoint.s…" 6 seconds ago Up 5 seconds 0.0.0.0:5432->5432/tcp postgres_db_1

Where is our migrator? Nowhere. There is no long-running process that docker can attach to (some kind of web listener on specific port). The Migrator was deployed and exited so the container lifecycle ended. Let’s check the compose logs using docker-compose logs.

1db-migrations_1 | Master ConnectionString => Host=db;Username=postgres;Password=***************;Database=postgres;Port=5432

2db-migrations_1 | Unhandled exception. System.Net.Sockets.SocketException (111): Connection refused

3db-migrations_1 | at Npgsql.NpgsqlConnector.Connect(NpgsqlTimeout timeout)

4db-migrations_1 | at Npgsql.NpgsqlConnector.RawOpen(NpgsqlTimeout timeout, Boolean async, CancellationToken cancellationToken)

5db-migrations_1 | at Npgsql.NpgsqlConnector.Open(NpgsqlTimeout timeout, Boolean async, CancellationToken cancellationToken)

6db-migrations_1 | at Npgsql.ConnectorPool.AllocateLong(NpgsqlConnection conn, NpgsqlTimeout timeout, Boolean async, CancellationToken cancellationToken)

7db-migrations_1 | at Npgsql.NpgsqlConnection.Open(Boolean async, CancellationToken cancellationToken)

8db-migrations_1 | at Npgsql.NpgsqlConnection.Open()

9db-migrations_1 | at PostgresqlExtensions.PostgresqlDatabase(SupportedDatabasesForEnsureDatabase supported, String connectionString, IUpgradeLog logger)

10db-migrations_1 | at PostgresqlExtensions.PostgresqlDatabase(SupportedDatabasesForEnsureDatabase supported, String connectionString)

11db-migrations_1 | at DbMigrator.Program.Main(String[] args) in /build/Program.cs:line 15

12db_1 | performing post-bootstrap initialization ... ok

That’s bad. What’s going on? Postgres didn’t make it to be up sooner than migrator so the connection was refused. Let’s add restart on-failure policy to compose yml file:

1services:

2 db:

3 image: postgres

4 restart: always

5 environment:

6 POSTGRES_PASSWORD: Secret!Passw0rd

7 POSTGRES_USER: postgres

8 ports:

9 - 5432:5432

10 db-migrations:

11 build:

12 context: DbMigrator/

13 dockerfile: ./Dockerfile

14 depends_on:

15 - db

16 environment:

17 DB_CONNECTION: "Host=db;User Id=postgres;Password=Secret!Passw0rd;Database=crazy_database;Port=5432"

18 restart: on-failure

Let’s run everything again. First let’s check for running containers:

1λ docker ps

2CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

Empty. Let’s compose up again:

1λ docker-compose up -d --build

2Starting postgres_db_1 ... done

3Starting postgres_db-migrations_1 ... done

Let’s enter containers bash and check the db schema:

1λ docker exec -it 30d bash

2root@30db9b19add6:/# psql -U postgres

3psql (12.2 (Debian 12.2-2.pgdg100+1))

4Type "help" for help.

5

6postgres=# \l

7 List of databases

8 Name | Owner | Encoding | Collate | Ctype | Access privileges

9-----------------+----------+----------+------------+------------+-----------------------

10 crazy_database | postgres | UTF8 | en_US.utf8 | en_US.utf8 |

11 postgres | postgres | UTF8 | en_US.utf8 | en_US.utf8 |

12 template0 | postgres | UTF8 | en_US.utf8 | en_US.utf8 | =c/postgres +

13 | | | | | postgres=CTc/postgres

14 template1 | postgres | UTF8 | en_US.utf8 | en_US.utf8 | =c/postgres +

15 | | | | | postgres=CTc/postgres

16(5 rows)

17

18postgres=# \c crazy_database

19You are now connected to database "crazy_database" as user "postgres".

20crazy_database=# \dt

21 List of relations

22 Schema | Name | Type | Owner

23--------+----------------+-------+----------

24 public | customers | table | postgres

25 public | schemaversions | table | postgres

26(2 rows)

27

28crazy_database=#

Done!

Troubles when using visual studio?

One thing you may run into when playing with docker:

Traceback (most recent call last):

File "site-packages\docker\utils\build.py", line 96, in create_archive

PermissionError: [Errno 13] Permission denied: '\\\\?\\C:\\postgres\\DbMigrator\\.vs\\DbMigrator\\v16\\Server\\sqlite3\\db.lock'

Just add .dockerignore file with this content:

1.vs

And you are good to go (we’ve just ignored some visual studio internal things from docker commands ie copy).

Github repo

Is here: https://github.com/marcingolenia/postgres-dbup

Summary

I hope you will enjoy this way of database development. You should be able to use SqlServer in very similar way - just change the db service in compose file, Program.cs of DbMigrator to work with SqlServer and of course connection string. Check my blog again for next post about some tips for working with migrations!